Here are four recent stories that have been dogging Apple. If you need proof that these issues are keeping Apple's programmers busy writing patches, read on…

FaceID fake-out using two dollars' worth of dollar-store supplies

“Some of our most sophisticated technologies — the TrueDepth camera system, the Secure Enclave and the Neural Engine — make FaceID the most secure facial authentication ever in a smartphone.” These are the words Apple use to describe their FaceID biometric authentication system.

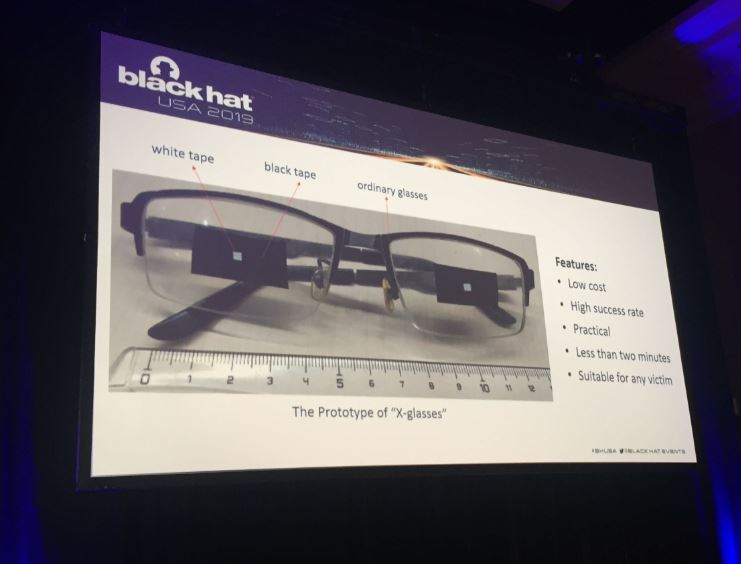

FaceID can recognize the device owner even when they are wearing glasses, and it works with many types of sunglasses. FaceID also has a “liveness” detection feature that is meant to stop anyone from unlocking a device while its owner is unconscious. Researchers took advantage of these two features to bypass FaceID: when a user is wearing glasses, FaceID won’t extract 3D information from the eye area, which leaves room to fool the liveness check.

Researchers bypass Apple’s FaceID using regular glasses and a tape

During Black Hat USA 2019, researchers demonstrated a way to bypass FaceID and unlock a locked phone simply by putting a pair of modified glasses on the device owner’s face.

Apple antitrust problems in Japan

Apple is known for its strong-arm tactics. Japan’s Fair Trade Commission has now launched an official investigation into Apple over alleged violations of anti-monopoly rules.

Apple strong-armed component manufacturers in Japan

Get a spam text and you’re hacked

Google Project Zero researcher Natalie Silvanovich worked with fellow Project Zero member Samuel Groß to investigate whether other forms of messaging, including SMS, MMS and visual voicemail, could be compromised. After reverse engineering these services and looking for flaws, she discovered multiple exploitable bugs in iMessage.

iMessage is thought to be especially exposed because it offers such a wide range of communication options and features, which make mistakes and weaknesses more likely: for example Animoji, the rendering of files such as photos and videos, and integration with other apps, including Apple Pay, iTunes and Airbnb.

One interactionless bug that stood out allowed hackers to extract data from a user’s messages. The attacker could send specially crafted texts to the target and get back, for example, the contents of the target’s SMS messages or images.

While iOS usually has protections in place that would block such an attack, this bug takes advantage of the system’s underlying logic, so iOS’s defences interpret it as legitimate.

iMessage bug lets you get hacked with just one message

Because these bugs don’t require any action from the victim, they are favoured by exploit vendors and nation-state hackers.

Siri, Alexa and Cortana are always listening, and so are third parties

An Apple contractor confirmed that there have been countless recordings featuring private discussions between doctors and patients, business deals, seemingly criminal dealings, sexual encounters and so on. A more serious issue is that these private recordings are accompanied by user data, including location, contact details and app data.

Apple contractors can no longer listen to your private conversations via Siri

Apple’s stock is doing just fine, but the optics of these stories certainly don’t look good.